CSE/EE 486: Computer Vision I

Computer Project Report #: Project 1

Image Transformations and Interpolation Methods

Group #4: Isaac Gerg, Adam Ickes, Jamie McCulloch

Date: October 1, 2003


A. Objectives


B. Methods

There is one 'M' file for this project, project1.m. It contains the code for the image translation and interpolation, and it is divided into six parts.

1. Creation of Translation Matrix, T.

Executing project1.m from Matlab

At the command prompt enter:


| C. |

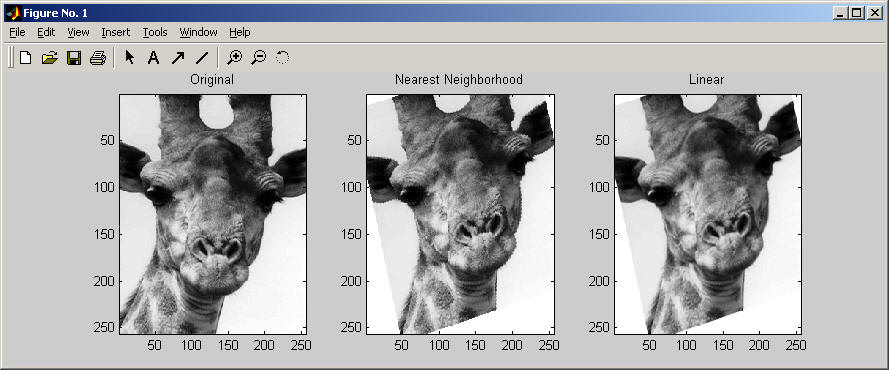

Results Image file names in parentheses.

Part 1

Part 3

Part 4

Part 5

Based on observation, the edges in the image were not smoothed. Some slightly jagged lines appeared in the rotated image that were not present in the original image.

Part 6

where FLOOR is the Matlab floor function. Based on observation, the edges in the image were noticeably smoother than those produced by nearest neighbor interpolation. This was expected, since a weighted average of neighboring pixel intensities was used whenever the computed coordinates were not integers.
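The difference between the two interpolation schemes can be illustrated with a minimal Python sketch. This is not the group's project1.m. The function names `sample_nearest` and `sample_bilinear`, the list-of-rows image layout, and the clamping at the image border are all assumptions made for illustration. Both functions sample a grayscale image at a non-integer location (x, y), which is the situation that arises when a pixel of the rotated image is mapped back into the original image.

```python
import math

def sample_nearest(img, x, y):
    """Return the intensity of the pixel closest to (x, y).

    img is a list of rows; x is the column coordinate, y the row coordinate.
    """
    rows, cols = len(img), len(img[0])
    # Round half up with floor, then clamp to the image bounds.
    c = min(max(int(math.floor(x + 0.5)), 0), cols - 1)
    r = min(max(int(math.floor(y + 0.5)), 0), rows - 1)
    return img[r][c]

def sample_bilinear(img, x, y):
    """Return a weighted average of the four pixels surrounding (x, y).

    Assumes the image is at least 2 x 2 pixels.
    """
    rows, cols = len(img), len(img[0])
    # Clamp the sample point so that all four neighbours exist.
    x = min(max(x, 0.0), cols - 1.0)
    y = min(max(y, 0.0), rows - 1.0)
    # Top-left neighbour, found with floor.
    x0 = min(int(math.floor(x)), cols - 2)
    y0 = min(int(math.floor(y)), rows - 2)
    # Fractional offsets act as the interpolation weights.
    dx = x - x0
    dy = y - y0
    top = (1 - dx) * img[y0][x0] + dx * img[y0][x0 + 1]
    bottom = (1 - dx) * img[y0 + 1][x0] + dx * img[y0 + 1][x0 + 1]
    return (1 - dy) * top + dy * bottom
```

For the 2 x 2 image `[[0, 100], [100, 200]]`, sampling at (0.5, 0.5) returns 200 with `sample_nearest` and 100.0 with `sample_bilinear`. The first snaps to a single pixel, which is what produces jagged edges, while the second blends all four neighbours.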

Summary


D. Conclusions

Using homogeneous coordinates, one can build compound transformations simply by multiplying the individual transformation matrices together. There are many different methods to scale, rotate, and otherwise transform an image. Because the image matrix is discrete, transformed coordinates do not always fall on integer pixel locations, and some visual information may be misrepresented. This can be mitigated by using different interpolation methods. Nearest neighbor interpolation yields a suitable image, but it is not as refined as an image created using linear interpolation. Linear interpolation is preferable for any type of image rotation where the smoothness of the image must be preserved. Translation and rotation functions are commonly used in elementary image editing.
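As an illustration of composing transformations by matrix multiplication, the following Python sketch builds 3 x 3 homogeneous matrices for translation and rotation and chains them. It is a sketch under stated assumptions, not the code in project1.m. The helper names (`translation`, `rotation`, `matmul`, `apply`, `rotation_about`) are hypothetical, and rotating about an arbitrary centre is only one example of a compound transformation.

```python
import math

def translation(tx, ty):
    """Homogeneous matrix that shifts a point by (tx, ty)."""
    return [[1.0, 0.0, tx],
            [0.0, 1.0, ty],
            [0.0, 0.0, 1.0]]

def rotation(theta):
    """Homogeneous matrix that rotates about the origin by theta radians.

    Counter-clockwise when the y axis points up; with image row
    coordinates (y pointing down) the same matrix appears clockwise.
    """
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]

def matmul(a, b):
    """Product of two 3 x 3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def apply(m, x, y):
    """Apply m to the point (x, y, 1) and convert back from homogeneous form."""
    xh = m[0][0] * x + m[0][1] * y + m[0][2]
    yh = m[1][0] * x + m[1][1] * y + m[1][2]
    w = m[2][0] * x + m[2][1] * y + m[2][2]
    return (xh / w, yh / w)

def rotation_about(cx, cy, theta):
    """Rotate about the point (cx, cy) instead of the origin.

    The rightmost matrix acts first: move the centre to the origin,
    rotate, then move the centre back.
    """
    return matmul(translation(cx, cy),
                  matmul(rotation(theta), translation(-cx, -cy)))
```

For example, `apply(translation(3, -2), 1, 1)` returns `(4.0, -1.0)`. A matrix returned by `rotation_about` leaves its centre point fixed while turning every other point around it, all within a single 3 x 3 matrix.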


E. Appendix

Source Code

Time Management
