CSE/EE 486: Computer Vision I
Computer Project Report #: Project 5
CAMSHIFT Tracking Algorithm
Group #4: Isaac Gerg, Adam Ickes, Jamie McCulloch
Date: December 7, 2003


A. Objectives

B. Methods

There are two M-files for this project. All coordinate references are defined as follows:

-X ^

where the + is the top left-hand corner of the image.

part1.m
1. Converts 14 image pairs of a video sequence to grayscale.

camshift.m
1. Implementation of the CAMSHIFT algorithm for tracking a hand in a video sequence.

Executing this project from within Matlab

At the command prompt enter:

C. Results

The video sequence analyzed in this experiment is located here. The first 14 pairs of frames in the video sequence were absolutely differenced to study the effect of frame differencing. For this video sequence, tracking by this method would not yield good results. The hand object is not "closed" in all of the differenced scenes. Furthermore, thresholding would be needed to remove background noise, which is caused by small movements of the person and by differences in illumination and reflection. With a tracking method such as this, one may want to use morphological techniques in an attempt to bound the object. Once an object is bounded, its centroid can be computed, which then enables us to track the object. In busy scenes with much variation in movement and light, this method is of little use. It is really only useful for transient optical flows: if the pixel intensities do not change, no motion is visible in the difference frames.

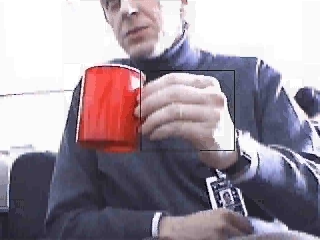

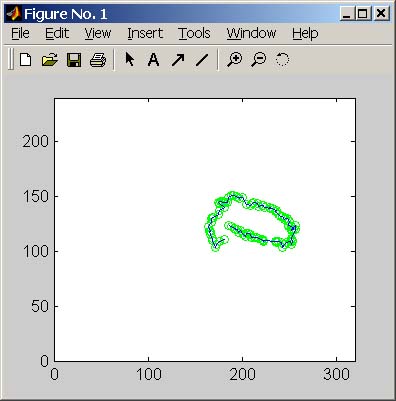

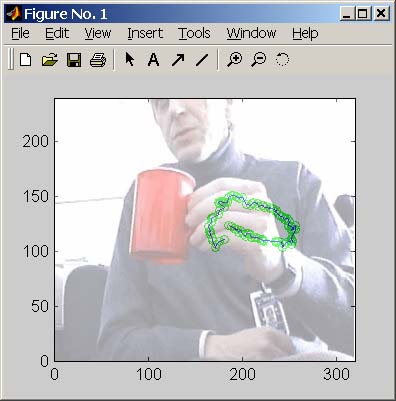
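The differencing approach discussed above can be illustrated with a minimal Python sketch. It is not the project's part1.m. The function names, the toy 4x4 frames, and the threshold of 20 are assumptions chosen only for illustration, and the morphological step is omitted.

```python
def abs_difference(frame_a, frame_b):
    """Pixel-wise absolute difference of two equally sized grayscale frames."""
    return [[abs(a - b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


def threshold(image, t):
    """Binary mask: 1 where the pixel value exceeds t, otherwise 0."""
    return [[1 if p > t else 0 for p in row] for row in image]


def centroid(mask):
    """Centroid (x, y) of the nonzero pixels, or None if the mask is empty."""
    count = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


# Toy frames (assumed data): a bright 2x2 block moves one pixel to the right,
# with a little background noise in the corners.
frame1 = [[10, 10, 10, 10],
          [10, 200, 200, 10],
          [10, 200, 200, 10],
          [10, 10, 10, 10]]
frame2 = [[12, 10, 10, 10],
          [10, 10, 200, 200],
          [10, 10, 200, 200],
          [10, 10, 10, 11]]

diff = abs_difference(frame1, frame2)
mask = threshold(diff, 20)          # threshold value is an assumption
print(centroid(mask))               # (2.0, 1.5)
print(centroid(threshold(abs_difference(frame1, frame1), 20)))  # None
```

In this toy case the column where the block overlaps itself between frames drops out of the mask, which mirrors the observation that the differenced object is not "closed". Two identical frames give an empty mask, so no motion is detected.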

CAMSHIFT Algorithm

The CAMSHIFT algorithm is based on the MEAN SHIFT algorithm. MEAN SHIFT works well on static probability distributions but not on dynamic ones, as in a movie. CAMSHIFT is based on the principles of MEAN SHIFT but adds a facet to account for these dynamically changing distributions. CAMSHIFT handles dynamic distributions by readjusting the search window size for the next frame based on the zeroth moment of the current frame's distribution. This allows the algorithm to anticipate object movement and quickly track the object in the next scene. Even during quick movements of an object, CAMSHIFT is still able to track correctly.

CAMSHIFT works by tracking the hue of an object, in this case flesh color. The movie frames were all converted to HSV space before individual analysis. CAMSHIFT was implemented as follows.

The initial search window was determined by inspection. Adobe Photoshop was used to determine its location and size. The initial window was just big enough to fit most of the hand inside it. A window that is too big may fool the tracker into tracking another flesh-colored object. A window that is too small will usually expand quickly to cover an object of constant hue; however, for quick motion, the tracker may lock onto another object or the background. For this reason, a hue threshold should be used to help ensure the object is properly tracked, and in the event that an object whose mean hue is not the correct color is being tracked, some operation can be performed to correct the error.

For each frame, the hue information was extracted. We noted that the hue of human flesh has a high angle value. This simplified our tracking algorithm, as the probability that a pixel belonged to the hand decreased as its hue angle did. Hue thresholding was also performed to help filter out the background and make the flesh color more prominent in the distributions.
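One way to picture the hue step is the Python sketch below, which builds a per-pixel probability map from hue and zeroes everything under a hue threshold. It is not the project's code. The function name `hue_probability`, the 8-bit RGB input, the use of the raw hue value as the probability, and the cutoff `hue_min=0.9` are all assumptions made for illustration. `colorsys.rgb_to_hsv` returns hue as a fraction of a full turn in [0, 1].

```python
import colorsys


def hue_probability(rgb_frame, hue_min=0.9):
    """Map each 8-bit (r, g, b) pixel to a probability based on its hue.

    Assumption: the probability is the hue itself, so it decreases as the
    hue angle decreases, and hues below hue_min are treated as background.
    """
    prob = []
    for row in rgb_frame:
        prob_row = []
        for r, g, b in row:
            h, _s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            prob_row.append(h if h >= hue_min else 0.0)
        prob.append(prob_row)
    return prob


# Toy 2x2 frame (assumed data): two pinkish pixels, one blue, one green.
frame = [[(204, 102, 128), (0, 0, 255)],
         [(0, 255, 0), (204, 102, 128)]]

prob = hue_probability(frame)
for row in prob:
    print(row)
```

Only the pinkish pixels, whose hue lies near the top of the range, survive the threshold. The blue and green pixels map to zero and so contribute nothing to the moments computed later.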
The zeroth moment, the first moment for x, and the first moment for y were all calculated within the search window. The centroid was then calculated from these values:

xc = M10 / M00; yc = M01 / M00

The search window was then shifted to center it on the centroid, and the mean shift was computed again. The convergence threshold used was T = 1. This ensured that we got a good track on each of the frames. The search window was expanded by 5 pixels in each direction to help track movement.

Once the convergent values were computed for the mean and centroid, we computed the new window size. The window size was based on the area of the probability distribution. The scaling factor was calculated by:

s = 1.1 * sqrt(M00)

The 1.1 factor was chosen after experimentation. A desirable factor is one that neither blows up the window size too quickly nor shrinks it too quickly. Since the distribution is 2D, we take the square root of M00 to get the proper length in one dimension.

The new window size was computed with this scaling factor. The width of the hand object was observed to be 1.2 times greater than its height, so the new window size was computed as:

W = [ (s) (1.2*s) ]

The window is centered on the centroid, and the computation for the next frame is started.
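The per-frame procedure described above can be sketched in Python as follows. This is a hedged illustration, not the project's camshift.m. The names `moments` and `camshift_step`, the `(x0, y0, w, h)` window layout, the reading of T = 1 as "the window moved by less than one pixel", the reading of W as height s and width 1.2*s, and the iteration cap are all assumptions.

```python
import math


def moments(prob, window):
    """Return M00, M10, M01 of prob inside window = (x0, y0, w, h)."""
    x0, y0, w, h = window
    rows, cols = len(prob), len(prob[0])
    m00 = m10 = m01 = 0.0
    # Clip the window to the image so out-of-range pixels are ignored.
    for y in range(max(0, y0), min(rows, y0 + h)):
        for x in range(max(0, x0), min(cols, x0 + w)):
            p = prob[y][x]
            m00 += p
            m10 += x * p
            m01 += y * p
    return m00, m10, m01


def camshift_step(prob, window, tol=1.0, pad=5, scale=1.1, aspect=1.2,
                  max_iter=20):
    """Process one frame: return (centroid, window for the next frame)."""
    x0, y0, w, h = window
    # Expand the search window by pad pixels in each direction.
    x0, y0, w, h = x0 - pad, y0 - pad, w + 2 * pad, h + 2 * pad
    m00, centroid = 0.0, None
    for _ in range(max_iter):
        m00, m10, m01 = moments(prob, (x0, y0, w, h))
        if m00 == 0:
            break  # nothing to track inside the window
        centroid = (m10 / m00, m01 / m00)  # xc = M10/M00, yc = M01/M00
        new_x0 = round(centroid[0] - w / 2)
        new_y0 = round(centroid[1] - h / 2)
        shift = math.hypot(new_x0 - x0, new_y0 - y0)
        x0, y0 = new_x0, new_y0  # re-center the window on the centroid
        if shift < tol:
            break  # converged
    if m00 == 0:
        return None, window
    s = scale * math.sqrt(m00)           # s = 1.1 * sqrt(M00)
    new_h = max(1, round(s))             # assumed: height = s
    new_w = max(1, round(aspect * s))    # assumed: width = 1.2 * s
    new_x0 = round(centroid[0] - new_w / 2)
    new_y0 = round(centroid[1] - new_h / 2)
    return centroid, (new_x0, new_y0, new_w, new_h)


# Toy distribution (assumed data): a 4x4 block of probability 1.0.
prob = [[0.0] * 20 for _ in range(20)]
for y in range(8, 12):
    for x in range(10, 14):
        prob[y][x] = 1.0

center, next_window = camshift_step(prob, (4, 4, 6, 6))
print(center)       # (11.5, 9.5)
print(next_window)  # (9, 8, 5, 4)
```

Here the initial window misses the block entirely, and the 5-pixel expansion is what lets the first iteration see it. The returned window would be passed in as the starting window for the next frame.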

Summary


D. Conclusions

Object tracking is a very useful tool. Objects can be tracked in many ways, including by color or by other features. Tracking objects with difference frames is not robust enough to work in every situation. There must be a static background and constant illumination to get good results. With this method, objects can be tracked only in situations with transient optical flow. If the pixel values don't change, no motion will be detected.

CAMSHIFT is a more robust way to track an object based on its color or hue. It is based on the MEAN SHIFT algorithm and improves upon it by accounting for dynamic probability distributions. It scales the search window size for the next frame as a function of the zeroth moment. In this way, CAMSHIFT is very robust for tracking objects.

There are many variables in CAMSHIFT. One must choose suitable thresholds and search window scaling factors. One must also take into account uncertainties in hue when a color has little intensity. Knowing the distributions well helps one pick scaling values that track the correct object. In any case, CAMSHIFT works well for tracking flesh-colored objects. These objects can be occluded or move quickly, and CAMSHIFT usually corrects itself.


E. Appendix

Source Code

Movies

The hand tracking movies have the following format parameters:

Time Management
